Abstract

Introduction

Artificial intelligence (AI) platforms are increasingly used by students, educators, researchers, and healthcare professionals in medical education. The objective of our study was to examine the accuracy of the results returned when pharmacology queries relevant to medical education were entered into various AI platforms. Our curriculum uses case-based learning (CBL) modeled on the constructivist principles of problem-based learning (PBL), in which learners are required to employ critical thinking skills and become self-directed. Because students use AI to understand the basic foundational sciences and for self-assessment, our goal was to determine how usable and trustworthy the results were when different platforms were queried with the same questions.

Methods

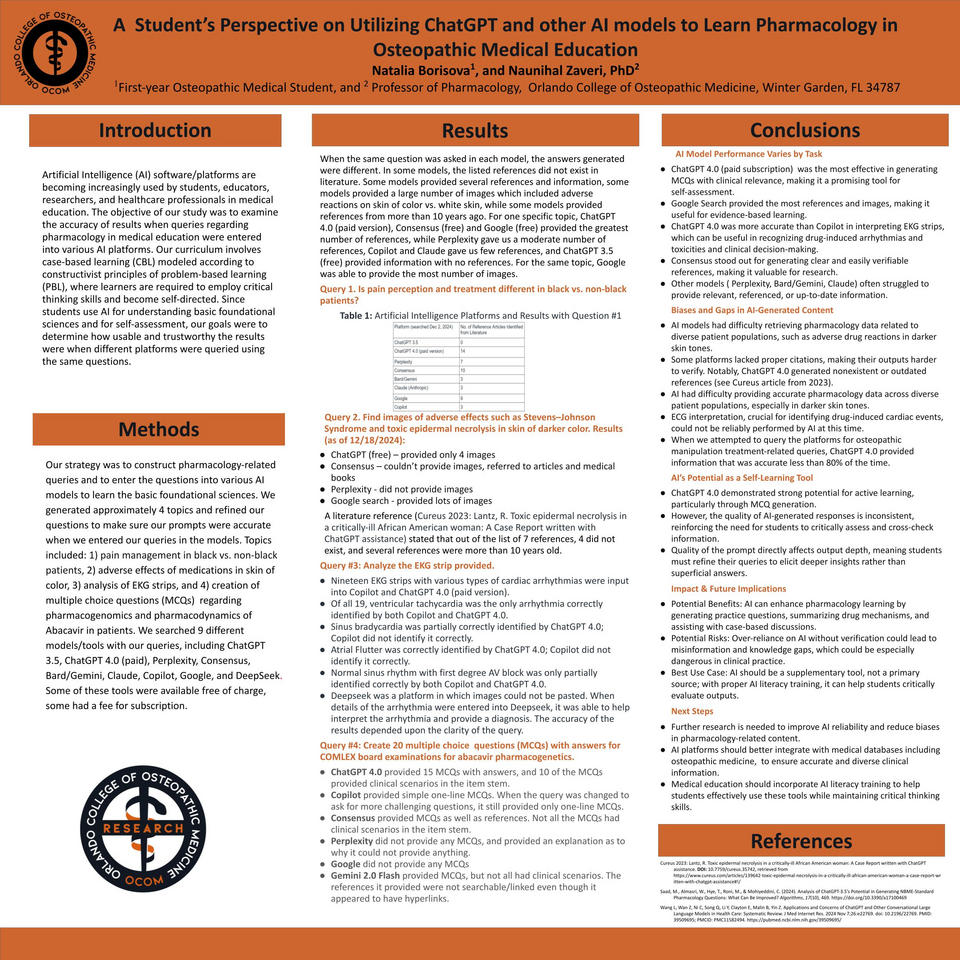

Our strategy was to construct pharmacology-related queries on the basic foundational sciences and to enter the same questions into various AI models. We generated four topics and refined our questions so that the prompts were accurate before we entered them into the models. The topics were: 1) pain management in Black vs. non-Black patients, 2) adverse effects of medications in skin of color, 3) analysis of EKG strips, and 4) creation of multiple-choice questions (MCQs) on the pharmacogenomics and pharmacodynamics of abacavir. We submitted our queries to 9 different models/tools: ChatGPT 3.5, ChatGPT 4.0 (paid), Perplexity, Consensus, Bard/Gemini, Claude, Copilot, Google, and DeepSeek. Some of these tools were available free of charge, while others required a paid subscription.

Results

When the same question was asked in each model, the answers generated were different. In some models, the listed references did not exist in the literature. Some models provided several references along with supporting information, some provided a large number of images, including adverse reactions on skin of color vs. white skin, and some provided references that were more than 10 years old. For one specific topic, ChatGPT 4.0 (paid version), Consensus (free), and Google (free) provided the greatest number of references; Perplexity provided a moderate number; Copilot and Claude provided few; and ChatGPT 3.5 (free) provided information with no references. For the same topic, Google provided the greatest number of images.

Conclusion

ChatGPT 4.0 (paid subscription) was the most effective in generating MCQs with clinical relevance, making it a promising tool for self-assessment. Google Search provided the most references and images, making it useful for evidence-based learning. ChatGPT 4.0 was more accurate than Copilot in interpreting EKG strips, which can be useful in recognizing drug-induced arrhythmias and toxicities and in clinical decision-making. Consensus stood out for generating clear and easily verifiable references, making it valuable for research. Other models (Perplexity, Bard/Gemini, Claude) often struggled to provide relevant, referenced, or up-to-date information. Some platforms lacked proper citations, making their outputs harder to verify. Notably, ChatGPT 4.0 generated nonexistent or outdated references. AI models had difficulty retrieving accurate pharmacology data for diverse patient populations, especially data on adverse drug reactions in darker skin tones.