Abstract

Introduction: Cricothyroidotomy is a procedure in which the airway is accessed through an incision and a tube is placed to ensure proper oxygenation and ventilation. Its use has increased recently because of COVID-19. Despite this increase, training that builds the required skills and decision-making remains challenging, since traditional teaching relies on videos, slides, and other multimedia that offer little hands-on practice. Additionally, advanced simulators are not cost-effective, which creates barriers to entry.

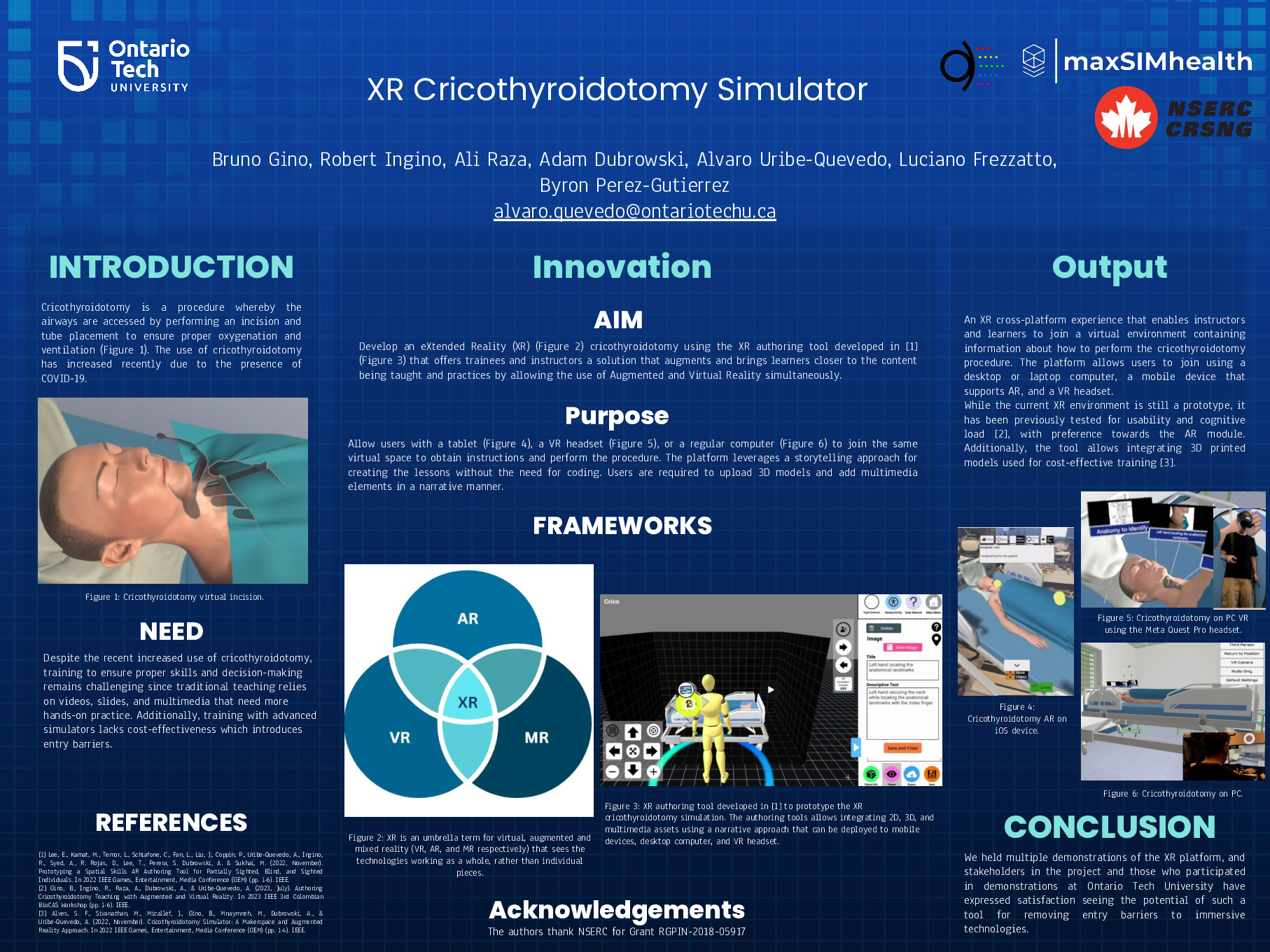

Methods: We developed an eXtended Reality (XR) cricothyroidotomy tool that brings trainees and instructors closer to the content being taught and practiced by allowing Augmented Reality (AR) and Virtual Reality (VR) to be used simultaneously. Users on a tablet, a VR headset, or a regular computer can join the same virtual space to receive instructions and perform the procedure. The platform uses a storytelling approach for creating lessons without the need for coding: users upload 3D models and add multimedia elements in a narrative sequence.

Results: The result is a cross-platform XR tool that enables instructors and learners to join a virtual environment containing information on how to perform the cricothyroidotomy procedure. Users can join from a desktop or laptop computer, a mobile device that supports AR, or a VR headset. They can collaborate, navigate, and discuss the procedure while also engaging in virtual hands-on manipulation of objects within the scene. We held multiple demonstrations of the XR platform, and both project stakeholders and participants in the demonstrations at Ontario Tech University expressed satisfaction with the scenario.

Discussion: While the current XR environment is still a prototype, enabling interactions across different platforms and levels of immersion presents an opportunity to remove technology barriers and widen user access. Future work will focus on refining the interactions with the 3D objects to better represent surgical actions, such as incisions and needle insertion, and so increase the realism of the experience. Additionally, integration with custom-made user interfaces and existing 3D-printed simulators will be explored in conjunction with haptics to improve virtual hands-on training.