Abstract

Introduction

Research with robots that assist seniors and people living with Developmental Disabilities (DD) was disrupted during the COVID-19 pandemic because access to long-term care facilities (LTCFs) was restricted. These restrictions hindered data collection for human-robot interaction (HRI) and fall-detection studies. Simulation offers an alternative for (i) fine-tuning the robot's navigation and (ii) generating synthetic data. Synthetic data overcomes both the difficulty of collecting real data and the privacy and ethical issues of sharing data sets. Herein, we present a virtual reality (VR) simulator for testing and evaluating robot behaviour when navigating a virtual environment, detecting virtual avatars, and generating synthetic data.

Aether, the Socially Assistive Robot

Aether is a robot designed to help seniors and people living with DD to achieve a higher degree of independence and to assist caregivers by alleviating the burden of care. It uses the YZ-01C robot base, a RealSense D455 camera, a PaceCat LDS-50C-2 lidar, a ReSpeaker microphone, and an embedded monitor.

VR Simulator

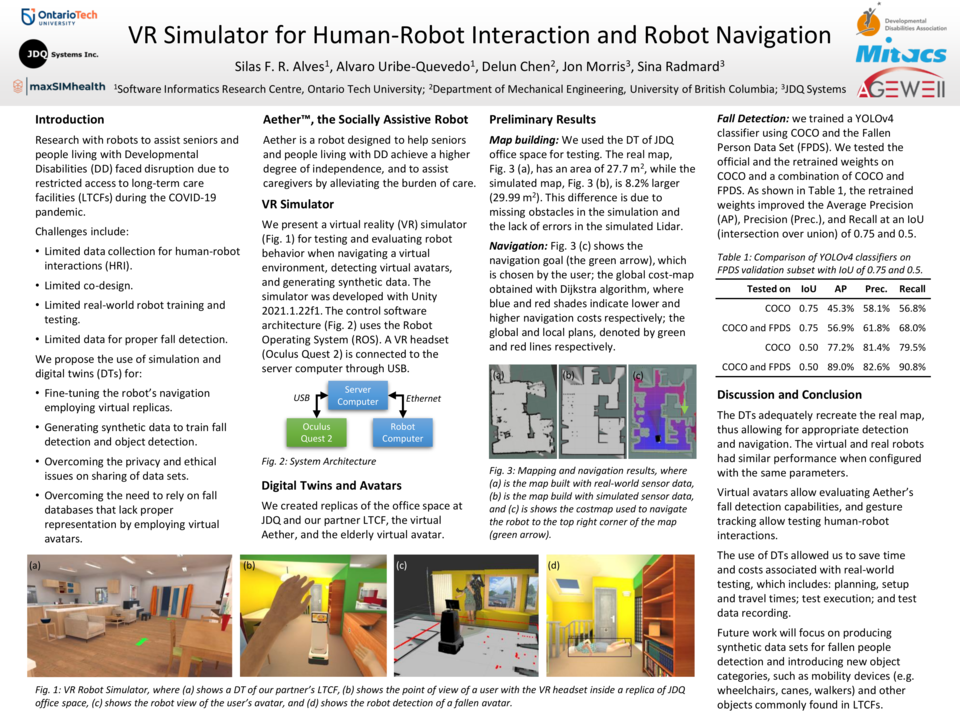

The simulator was developed with Unity 2021.1.22f1, and the robot's control software is built on the Robot Operating System (ROS). The simulator runs on a Lenovo 81SX000KUS with Microsoft Windows 10 (server computer), and ROS runs on an Intel NUC10i7FNH with Ubuntu 18.04 (robot computer). The two machines communicate over Gigabit Ethernet. An Oculus Quest 2 VR headset is connected to the server computer via USB.

Digital Twins (DTs) and Avatars

We created replicas of the office space at JDQ and of our partner LTCF, as well as a virtual Aether and a virtual avatar of an older adult. The avatar is moved in two ways. The first uses predefined animations obtained from Mixamo. The second is puppeteering, in which a VR headset and controllers are mapped to the avatar.

Preliminary Results

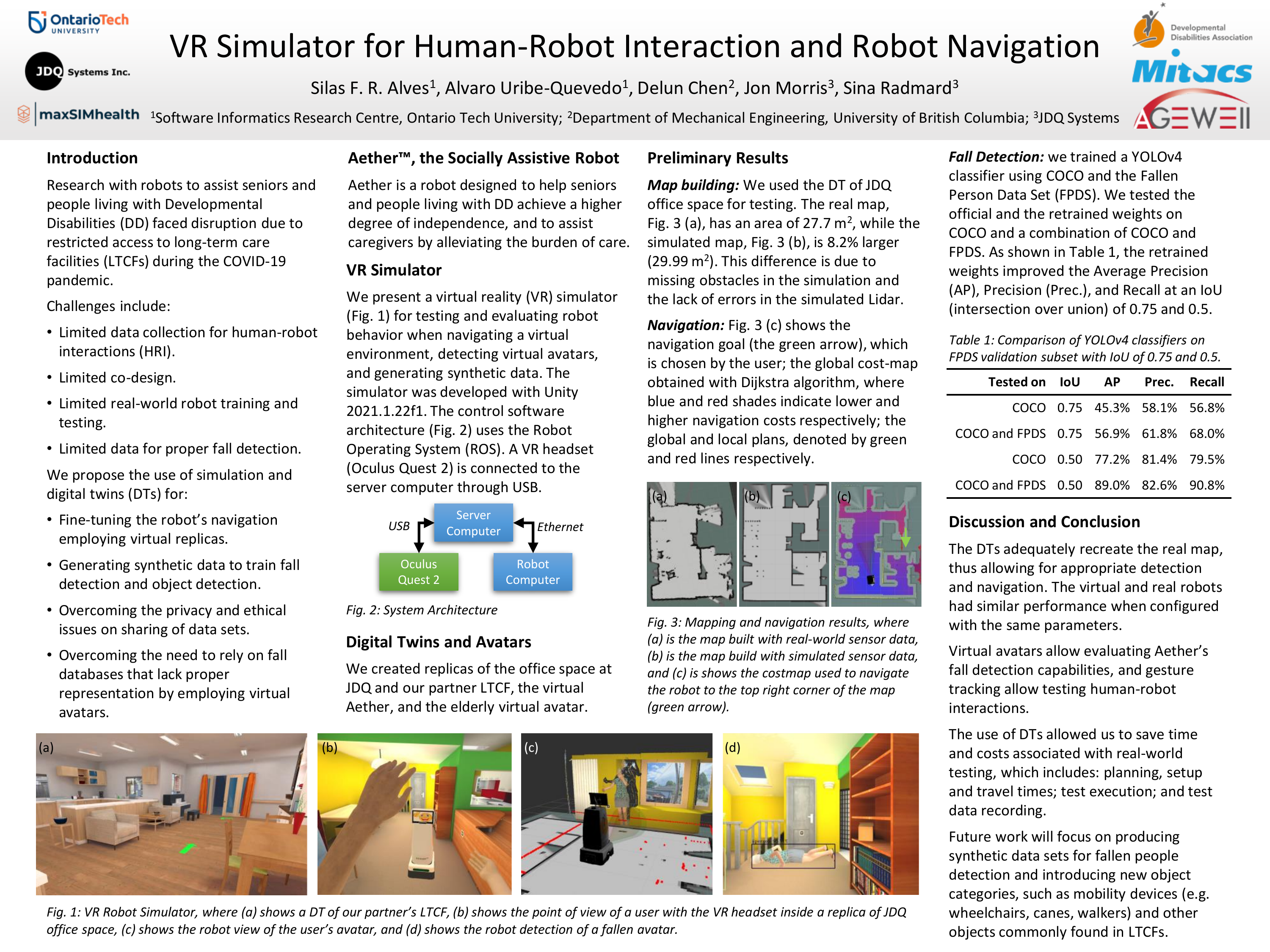

Map building: we used the DT of the JDQ office space for testing. The real map has an area of 27.7 m², while the simulated map is 8.2% larger (29.99 m²). We attribute this difference to obstacles missing from the simulation and to the absence of measurement errors in the simulated lidar.
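The paper does not state how the map areas were measured. One plausible approach counts the known free cells of a ROS occupancy grid and multiplies by the squared cell resolution. The sketch below follows that assumption. The free-space cut-off `FREE_THRESHOLD` and the function names `free_area_m2` and `relative_difference_pct` are hypothetical.

```python
from typing import Sequence

# Assumption: cell values follow the ROS nav_msgs/OccupancyGrid convention,
# -1 = unknown, 0..100 = occupancy probability in percent.
FREE_THRESHOLD = 25  # hypothetical cut-off: known cells below this count as free


def free_area_m2(grid: Sequence[Sequence[int]], resolution_m: float,
                 free_threshold: int = FREE_THRESHOLD) -> float:
    """Area in square metres covered by known free cells of a 2-D occupancy grid."""
    free_cells = sum(1 for row in grid for value in row if 0 <= value < free_threshold)
    return free_cells * resolution_m ** 2  # each cell covers resolution x resolution


def relative_difference_pct(real_m2: float, simulated_m2: float) -> float:
    """Percentage by which the simulated area exceeds (positive) or falls short of (negative) the real one."""
    return (simulated_m2 - real_m2) / real_m2 * 100.0


# Toy 4 x 4 map at 0.5 m resolution: 7 free cells -> 7 * 0.25 = 1.75 m^2.
toy_map = [
    [0, 0, 100, -1],
    [0, 0, 0, -1],
    [100, 100, 0, 0],
    [-1, -1, -1, -1],
]
print(free_area_m2(toy_map, 0.5))  # 1.75
```

Unknown cells (-1) are excluded. Obstacles absent from the simulated environment therefore show up directly as extra free area, which is consistent with the larger simulated map.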

Navigation: the user chooses the navigation goal. Dijkstra's algorithm is run over the global cost map, and the result is used to generate the global and local plans.
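The search step can be illustrated with a minimal Dijkstra sketch on a 4-connected 2-D cost map. This is not the ROS planner running on Aether, and several details are assumptions for illustration:

- the step cost (1 plus the entered cell's cost value);
- the impassable threshold `LETHAL = 254`, borrowed from the ROS costmap_2d convention;
- the function name `dijkstra_plan`.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
LETHAL = 254  # assumed costmap_2d convention: cells >= 254 are impassable


def dijkstra_plan(costmap: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Lowest-cost 4-connected path on a 2-D cost map, or None if the goal is unreachable.

    Entering a cell costs 1 (distance) plus that cell's cost value.
    """
    rows, cols = len(costmap), len(costmap[0])
    dist: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    queue = [(0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            # Walk the parent links back to the start to recover the path.
            path: List[Cell] = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if d > dist[cell]:
            continue  # stale queue entry superseded by a cheaper one
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and costmap[nr][nc] < LETHAL:
                nd = d + 1 + costmap[nr][nc]
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))
    return None


costmap = [
    [0, 50, 0],
    [0, 0, 0],
]
print(dijkstra_plan(costmap, (0, 0), (0, 2)))  # [(0, 0), (1, 0), (1, 1), (1, 2), (0, 2)]
```

The cost of entering a cell grows with its cost value. As a result, the path detours around high-cost cells (here the cell valued 50) rather than taking the geometrically shortest route.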

Fall Detection: we retrained a YOLOv4 object detector on COCO and the Fallen Person Data Set (FPDS). We evaluated both the official and the retrained weights on COCO and on a combination of COCO and FPDS. The retrained weights improved Average Precision (AP), Precision (Prec.), and Recall at intersection over union (IoU) thresholds of 0.5 and 0.75.
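The following sketch shows what precision, recall, and AP "at an IoU threshold" compute, for a single class in a single image. The paper does not state which AP definition was used, so the sketch makes these assumptions:

- boxes are corner coordinates;
- detections are matched greedily, highest confidence first, to the best-overlapping unmatched ground-truth box;
- AP uses all-point interpolation in the PASCAL VOC style. COCO's official evaluation instead uses 101-point interpolation.

The function names `iou` and `evaluate` are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def evaluate(detections: Sequence[Tuple[float, Box]], ground_truth: Sequence[Box],
             iou_threshold: float) -> Tuple[float, float, float]:
    """Return (precision, recall, average_precision) for (confidence, box) detections."""
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    matched = [False] * len(ground_truth)
    tp_flags: List[bool] = []
    for _, box in ranked:
        # Pick the unmatched ground-truth box with the highest IoU >= threshold.
        best_idx, best_iou = -1, iou_threshold
        for i, gt in enumerate(ground_truth):
            overlap = iou(box, gt)
            if not matched[i] and overlap >= best_iou:
                best_idx, best_iou = i, overlap
        if best_idx >= 0:
            matched[best_idx] = True
        tp_flags.append(best_idx >= 0)

    n_gt = len(ground_truth)
    tp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += int(is_tp)
        precisions.append(tp / k)
        recalls.append(tp / n_gt if n_gt else 0.0)

    # Interpolation envelope: precision at each rank becomes the max precision at any later rank.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p  # area added only where recall increases
        prev_recall = r

    precision = tp / len(ranked) if ranked else 0.0
    recall = tp / n_gt if n_gt else 0.0
    return precision, recall, ap


gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (20, 20, 30, 31))]
for threshold in (0.5, 0.75):
    print(threshold, evaluate(dets, gt, threshold))
```

Raising the IoU threshold from 0.5 to 0.75 can only turn true positives into false positives, never the reverse. Metrics at 0.75 therefore reward tighter boxes.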

Discussion and Conclusion

The DTs adequately recreate the real map, which allows appropriate detection and navigation. The virtual and real robots performed similarly when configured with the same parameters. A virtual avatar that walks and falls will speed up the evaluation of Aether’s fall-detection behaviour. Puppeteering allows testing HRI with more dynamic and diverse movements than predefined animations provide. Simulation also saved the time and cost of real-world testing, including planning, setup, and travel; test execution; and test data recording.

Future work will focus on producing synthetic data sets for fallen-person detection. It will also introduce new object categories, such as mobility devices (e.g., wheelchairs, canes, and walkers) and other objects commonly found in LTCFs.