Abstract

Objective: This study evaluated whether the use of proctoring software and various test settings affects student performance in an undergraduate health science program.

Method: In this cross-sectional study, Health Science faculty completed a 7-item survey using Google Forms. Descriptive statistics were calculated for overall course averages, the use of proctoring software (e.g., Respondus Lockdown Browser, ProctorTrack), and the use of various test settings.

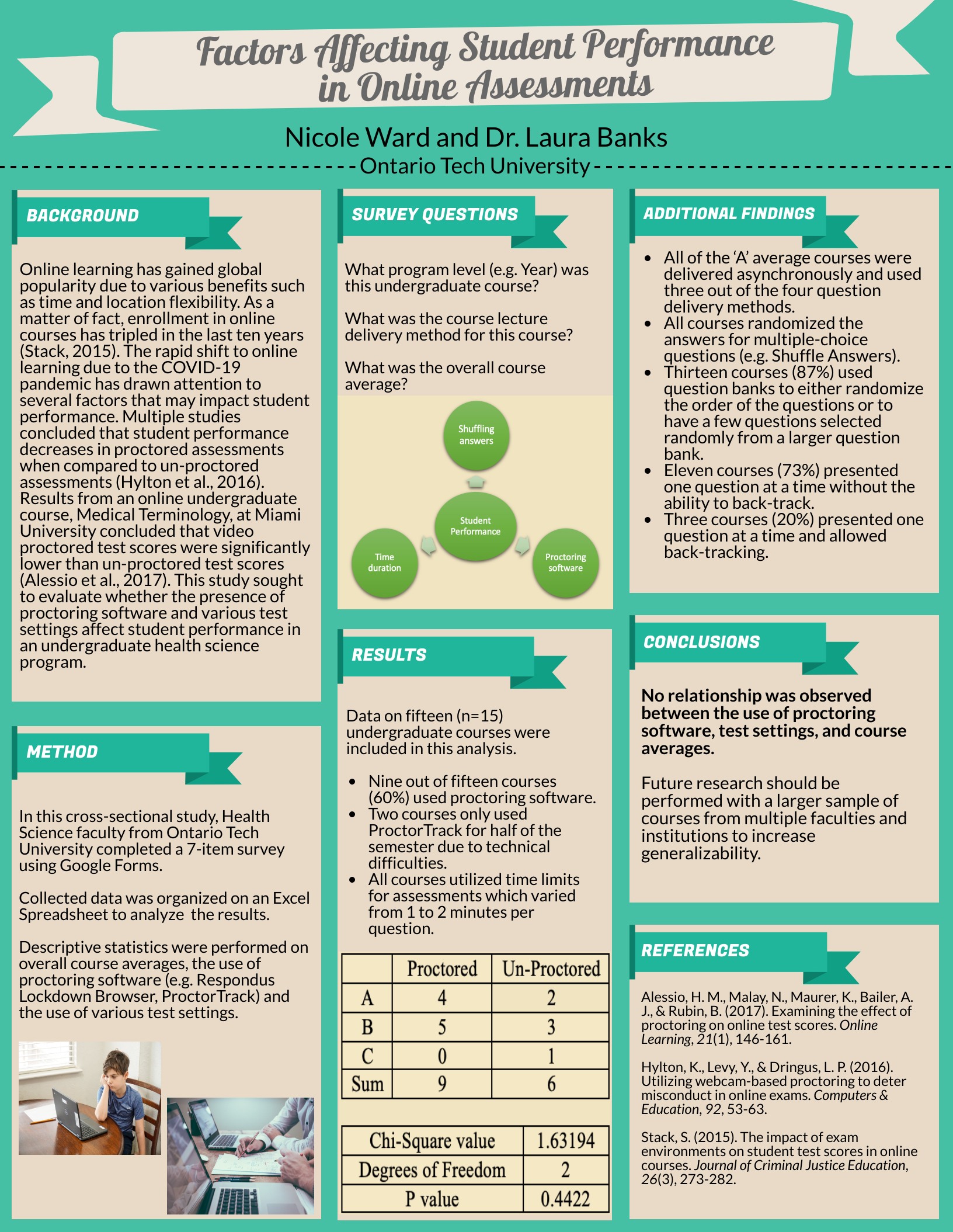

Results: Data on fifteen undergraduate courses (n=15) were included in this analysis. Nine of the fifteen courses (60%) used proctoring software. All courses randomized the answer order for multiple-choice questions. Thirteen courses (87%) used question banks, either to randomize the order of the questions or to select a few questions at random from a larger bank. Eleven courses (73%) presented one question at a time without the ability to backtrack, while three courses (20%) presented one question at a time and allowed backtracking. All courses used time limits for assessments, which ranged from 1 to 2 minutes per question. The course averages with proctoring were four ‘A’s and five ‘B’s. The course averages without proctoring were two ‘A’s, three ‘B’s, and one ‘C’. All of the courses with an ‘A’ average were delivered asynchronously and used three of the four question delivery methods.
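The percentages and grade tallies reported above can be reproduced with a minimal sketch such as the following. It uses only the counts stated in the Results; the variable names and the `percent` helper are illustrative assumptions, not part of the study's actual analysis.

```python
from collections import Counter

TOTAL_COURSES = 15  # n reported in the Results

# Course counts as stated in the Results (labels are illustrative)
reported_counts = {
    "proctoring software": 9,
    "question banks": 13,
    "one question at a time, no backtracking": 11,
    "one question at a time, backtracking allowed": 3,
}


def percent(count, total=TOTAL_COURSES):
    """Return count as a whole-number percentage of total."""
    return round(100 * count / total)


percentages = {label: percent(n) for label, n in reported_counts.items()}

# Letter-grade course averages, split by proctoring status
proctored = Counter({"A": 4, "B": 5})
unproctored = Counter({"A": 2, "B": 3, "C": 1})

for label, pct in percentages.items():
    print(f"{label}: {reported_counts[label]}/{TOTAL_COURSES} ({pct}%)")

print("proctored courses:", sum(proctored.values()))
print("unproctored courses:", sum(unproctored.values()))
```

The two grade tallies sum to nine and six courses, which matches the reported split of the fifteen courses by proctoring status.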

Conclusion: No relationship was observed among the use of proctoring software, test settings, and course averages. Future research should include a larger sample of courses from multiple faculties and institutions to increase generalizability.